Jul 20, 2009, 06:33 PM // 18:33

#21

Core Guru

Join Date: Feb 2005

I bet you $5 the problem is disk access, and you probably have my ksmod installed too, which slows down player loads by 4x. GW streams textures on the fly, and this is the #1 cause of low frame rates. If you have crappy disk access speeds, a fragmented GW.DAT, or other programs using your disk in the background (e.g. BitTorrent), you will get low frame rates regardless of your hardware. Every time a player loads into a map, the GW engine pauses while its texture is generated and then loaded.
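If you want to test the disk-speed theory yourself, a rough sequential read of GW.DAT is one quick check. The sketch below is a minimal Python illustration, not a proper disk benchmark: it assumes you pass in the path to your own GW.DAT (the location depends on your install), and the OS file cache can serve a recently read file straight from RAM, so a run right after a reboot gives a more honest number. It also only measures sequential reads, while texture streaming may hit the file more randomly, so treat the result as a best case.

```python
import time


def read_throughput_mb_s(path: str, chunk_size: int = 1 << 20) -> float:
    """Read `path` front to back and return the observed speed in MB/s.

    The OS page cache can make a recently read file look much faster
    than the disk really is, so interpret the result with care.
    """
    total_bytes = 0
    start = time.perf_counter()
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)  # read 1 MiB at a time by default
            if not chunk:
                break
            total_bytes += len(chunk)
    # Guard against a zero elapsed time on tiny or fully cached files.
    elapsed = max(time.perf_counter() - start, 1e-9)
    return total_bytes / (1024 * 1024) / elapsed
```

Call `read_throughput_mb_s()` with the full path to GW.DAT and compare the figure with what your drive should manage; a result far below that points at fragmentation or background disk activity.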

GW also suffers from EvictManagedResources() bugs from time to time (driver errors), where it wipes video memory completely every so often and forces everything to be reloaded. It happens on certain maps when the camera is looking in certain directions. Blocking the call to EMR() solves the problem, and so does updating or reverting your drivers to another version.

Quote:

His CPU pulls 7 amps overclocked. His motherboard, HDD, RAM, and fans pull another 5 amps. Total pull is 300 watts, and that is being liberal, because no game or program can max out all system components at once. 550 W PSUs easily run overclocked i7s and 2 GPUs. His old dual-core, single-GPU setup is no match for even a no-name-brand 400 W PSU.

Last edited by Brett Kuntz; Jul 20, 2009 at 06:44 PM // 18:44.

Jul 20, 2009, 07:12 PM // 19:12

#22

The Fallen One

Join Date: Dec 2005

Location: Oblivion

Guild: Irrelevant

Profession: Mo/Me

Quote:

Your GPU doesn't "pull amperes"; it needs a specific amount at the given DiELG junctions to operate smoothly (this being after conversion and structuring). Amperes measure current, that is, electrons per point of relation. If you care to argue the semantics of electrical physics, I have a PhD to back me up, and you have...? You think of a PSU as a two-dimensional object, whereas in the realm of DC (direct current, if you aren't up to date on electrical terms) the PSU serves many more dynamics. Typically, the +12 V rails are shared as well, because manufacturers are cheap and don't want to build specific capacitor/resistor series, as it costs extra.

PSUs are also not perfectly efficient; nothing in the world is. Best-case scenario, you are looking at ~86% efficiency at the PCapacitor, and down the wire... maybe 70% or less. Not great, I am afraid. There is significant iL5 gate leakage in rev0 of the GTX 260 and subsequently the GTX 280. In addition to that leakage, there are ramp-up issues with core-to-display output. English? That means the card uses and wastes power. This is a fundamental flaw with most first-revision cards. In a perfect world, with no DiELG leakage or e- inversion, sure, maybe (and that is a huge maybe), but real physics doesn't permit perfection, I'm afraid...

I will not argue what PSU requirements are. A 550 watt PSU (assuming 2 hard disks, 2 optical drives, a Core2 Quad, a mid-to-high-end motherboard, high-speed low-latency RAM, and 3+ peripherals, with a GTX 260 rev0) isn't really enough unless you run extremely low settings, and even that is stretching it. Now, one could argue that if you set up the system PERFECTLY, using very specific parts, it probably would be. But, in all honesty, his system is not that case. Sorry, but until we perfect superconducting materials, you won't see a PSU that can output near what it should at the wire. This is fact, not fiction. If you want to debate me, fine, by all means.
The fact is, there is iL5 leakage, and PSUs and PC electrical systems are FAR from perfect (Ohm can back me up here, as will Kirchhoff with regard to internal complex circuits!). You can find all the data you want, but unless his PSU is running at the maximum theoretical efficiency it can output, and his components are at the most minimal leakage that can be allowed (all his parts are "perfect" off the assembly line), I am afraid it isn't enough. Again, keep in mind that the driver issue is magnified by the lack of power. The problem isn't so much that there isn't enough power, but that there isn't enough power to overcome the driver issues with large texture unpacking.

Jul 21, 2009, 01:04 AM // 01:04

#23

Core Guru

Join Date: Feb 2005

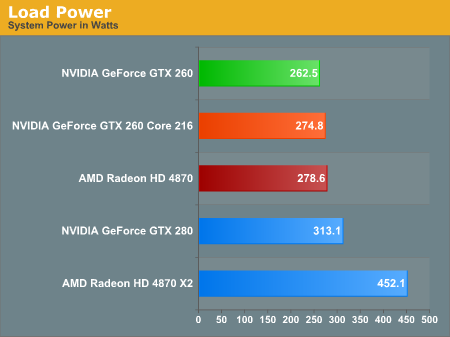

You are incorrect. There is not one article or review on the internet that shows any single GPU setup pulling more than 550 watts from an outlet.

Not. Even. Close. I know you work for nVidia, but you were horribly wrong on memory latencies and on why bus width has a direct effect on the size and quality of a graphics chip. For someone who claims to work for nVidia, your knowledge of even the most basic computer areas is non-existent. I can't really argue against your GTX/PSU post, because you basically just posted a bunch of mumbo-jumbo lingo that has nothing to do with my point.

It is a fact: no single-GPU system, regardless of HDDs, ODDs, fans, and motherboard, can pull over 500 watts. The most hardcore quads with the most hardcore GPUs pull around 300 watts during gaming. Hell, this single link proves my entire point: http://www.anandtech.com/video/showdoc.aspx?i=3408&p=9

Note that even the monster GTX 280 system only pulls 313 watts from the wall under load; assuming a liberal 85% efficiency PSU, that is a total system draw of 266 watts. A 266 watt PSU could run a quad-core QX9770 Extreme @ 3.2 GHz, 4 sticks of RAM, and a GTX 280. I know many people who run OC'd 4 GHz quad-cores and 2 GPUs on 550 watt PSUs and never have issues. Considering 750 watt PSUs are known to run 4-GPU systems for benchmarking and Folding@home, I'm pretty sure a 550 watt PSU can easily handle 1 GPU.
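The efficiency step in that calculation is plain arithmetic: the wall reading covers both what the components draw and what the PSU itself wastes as heat, while PSU wattage ratings describe DC output. A minimal Python sketch, using the 313 W wall reading and the 85% efficiency assumed in the post (an assumption, not a measured efficiency for that particular unit):

```python
def dc_load_watts(wall_watts: float, efficiency: float) -> float:
    """Estimate the DC load delivered to the components from a wall-socket reading.

    A PSU that is `efficiency` efficient (0 < efficiency <= 1) passes
    wall_watts * efficiency on to the components; the rest is lost as heat.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return wall_watts * efficiency


load = dc_load_watts(313, 0.85)  # wall reading and efficiency from the post
print(f"Estimated DC load: {load:.0f} W")  # Estimated DC load: 266 W
```

A less efficient PSU would make the DC load smaller for the same wall reading, which is why 85% counts as the generous end of the estimate here.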

Jul 21, 2009, 02:06 AM // 02:06

#24

Academy Page

Join Date: Jan 2009

Profession: W/

I run the game at 3-7 FPS at all times. I can't play most of NF and EotN because my graphics card is not compatible; I'm not sure about Factions. So... I am below 60 FPS.

Jul 21, 2009, 02:27 AM // 02:27

#25

Older Than God (1)

Join Date: Aug 2006

Guild: Clan Dethryche [dth]

As a dispassionate observer:

Kuntz, you are talking past him, and he's not going to take you seriously until you debate him on the merits. His argument logically boils down to this: "pull" doesn't exist as a theoretical construct, but is merely an oversimplification of the physics. As an approximation, it is a useful explanatory tool...except when it does not work, and he argues that it does not work in this particular case. You attempt to dismiss his argument as technical mumbo-jumbo, but if you cannot offer a coherent explanation for why "pull" is a valid interpretation of the physics of this problem, you have lost this battle.

Your external citation proves a lot less than you think it does. A measurement of system wattage does not address the core question of whether this card is getting the power it needs to operate well. You argue that he was technically wrong on two previous issues but offer no proof in either case. Additionally, citing JUST your own post in the thread where he responded and you did not take up the gauntlet appears intellectually disingenuous.

Of course, the only way to actually resolve who is correct is through empirical evidence. The best advice I can give the OP is to choose the recommendation you feel is most accurate, implement it, and report your findings. Only move on to the other recommendation if the first one proves unsatisfactory.

Jul 21, 2009, 04:03 AM // 04:03

#26

Jungle Guide

Join Date: Jun 2006

Location: Boise Idaho

Guild: Druids Of Old (DOO)

Profession: R/Mo

If the OP is using a Corsair VX550 PSU, it has only a single +12 V rail and is certified to be 80% efficient at full load. HardOCP actually awarded it their rare Gold award. The VX550 has not been out very long, so I have no clue as to the specific model he is using.

As for the driver issue mentioned by Rahja, that is the primary reason I will not update the driver for my 9600GT or the 7900GS (AGP). The newer the driver, the worse the performance. Shoot, the 7900GS is still using a 96-series driver that provides 50+ FPS on a 1440x900 monitor. If the OP's PSU is not a VX series, then Rahja hit the nail on the head. The previous generations of PSU put out by Corsair were consistently overrated beyond any reason: I have a Corsair PSU marked 550 W with a maximum power output of 490 W. I would be willing to bet that the overclocking he did to the card did not result in a corresponding increase in FPS. If it did, the PSU is good, but I bet the increase was less than 25% of what was expected.

Jul 21, 2009, 04:35 AM // 04:35

#27

Lion's Arch Merchant

Join Date: Jul 2006

Profession: W/

Quote:

BTW, this was done with my card overclocked:

- Run #1: DX10 1280x1024, AA=4x, 64-bit test, Quality: VeryHigh ~~ Overall Average FPS: 32.175
- Run #2: DX10 1680x1050, AA=4x, 64-bit test, Quality: VeryHigh ~~ Overall Average FPS: 24.895
- Run #3: DX10 1920x1080, AA=4x, 64-bit test, Quality: VeryHigh ~~ Overall Average FPS: 21.42
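Average FPS on its own is hard to compare across resolutions. One rough normalization, sketched here in Python as an illustration rather than any standard metric, is to multiply each run's FPS by its pixel count to get megapixels rendered per second. For the three runs above this comes to roughly 42.2, 43.9, and 44.4 Mpixel/s, within about 5% of each other. It ignores everything except resolution, so only compare runs made with the same AA, quality level, and DX version.

```python
def mpixels_per_second(width: int, height: int, fps: float) -> float:
    """Megapixels rendered per second at a given resolution and average FPS."""
    return width * height * fps / 1e6


# The three runs from the post: (width, height, overall average FPS).
runs = [(1280, 1024, 32.175), (1680, 1050, 24.895), (1920, 1080, 21.42)]

for width, height, fps in runs:
    print(f"{width}x{height}: {fps:.2f} FPS -> "
          f"{mpixels_per_second(width, height, fps):.1f} Mpixel/s")
```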

Jul 21, 2009, 07:17 AM // 07:17

#28

Core Guru

Join Date: Feb 2005

Quote:

Quote:

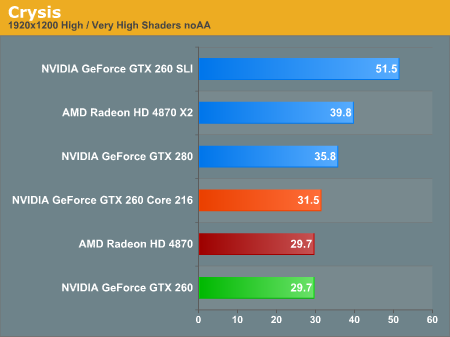

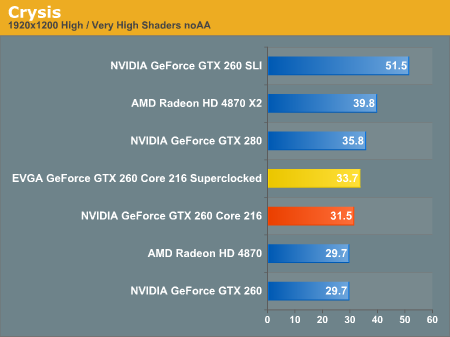

You're basically saying that in homies' 550 watt system, the GTX 260 has somehow magically detected it's on a 550 watt PSU and is purposely pulling less power? Then why is it that, in a related article, a GTX 260 is shown to pull only 274 watts from the wall TOTAL SYSTEM? Did that card magically detect that there was only a 1200 watt PSU and decide to pull less power too? What kind of special Unicorn Fairy Magic PSU does the GTX 260 need to work at full power, then? LOL

Quote:

Crysis is one of the most benchmarked games around now, so with a bit of searching you could probably find someone with a system similar to yours and check your FPS against theirs.

Jul 21, 2009, 07:28 AM // 07:28

#29

Jungle Guide

Join Date: Jun 2006

Location: Boise Idaho

Guild: Druids Of Old (DOO)

Profession: R/Mo

Quote:

Corsair does make a good product, now. When they started out, they were like everyone else, buying someone else's product and putting their label on it. After looking over the benchmarks posted so kindly by Kuntz, I would advise you to roll back your driver to a previous version. If you choose to do that, you will want to clean off the leftovers that the uninstaller leaves behind. As for Kuntz's assurances that it is not the power supply: WRONG. It still could be. I have seen it happen on my own system. When I upgraded the power supply, I got an improvement of more than 20% in frame rates on the 9600GT. The PSU was the ONLY thing changed.

Last edited by KZaske; Jul 21, 2009 at 07:38 AM // 07:38.

Jul 21, 2009, 08:26 AM // 08:26

#30

Core Guru

Join Date: Feb 2005

Quote:

His PSU is well known for running two 4870s in CrossFire or two 260s in SLI. Two GTX 260s in SLI need about a 400 watt PSU (if you want to cut it close). Obviously, that's why people with two 4870s/260s buy 550 watt PSUs.

Jul 21, 2009, 08:50 AM // 08:50

#31

Core Guru

Join Date: Feb 2005

http://www.extreme.outervision.com/PSUEngine

His system, guessing some stats, needs around a 277 watt PSU. Let's have some fun, though; let's see what you can run on a 550 watt PSU:

- E8400 at 100% utilization
- GTX 260 216-Core at 100% utilization
- 4 sticks of RAM
- TEN SATA hard drives
- FIVE optical DVD drives
- TEN 120mm fans (for all those HDDs lmao)
- Entire system at 100% utilization all at once (impossible in real-world conditions)

Wattage needed: 549 lol

Edit: Within 10 minutes I found numerous forum posts from people running Folding@home on 260 SLI systems with quad-cores on 500 W and 550 W PSUs. And if there is ANYONE on Earth who is anal-retentive about power consumption vs. PPD, it's Folding@home junkies.
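The calculator approach boils down to summing estimated per-component loads and comparing the total with the PSU's rated output. This is only a minimal Python sketch of that bookkeeping, not how the linked calculator works internally, and the wattages in the example are placeholder numbers chosen for illustration, not measurements of any real part.

```python
from typing import Dict


def budget_report(loads_w: Dict[str, float], psu_rating_w: float) -> Dict[str, float]:
    """Sum estimated component loads (watts) and compare them with a PSU's rated DC output."""
    total = sum(loads_w.values())
    return {
        "total_w": total,
        "headroom_w": psu_rating_w - total,
        "load_fraction": total / psu_rating_w,
    }


# Placeholder estimates for illustration only; NOT measurements of real parts.
# Replace them with figures from a PSU calculator or component spec sheets.
example_loads = {
    "cpu": 100.0,
    "gpu": 180.0,
    "motherboard_and_ram": 50.0,
    "drives_and_fans": 30.0,
}
report = budget_report(example_loads, psu_rating_w=550.0)
print(f"Estimated load: {report['total_w']:.0f} W, "
      f"headroom: {report['headroom_w']:.0f} W, "
      f"{report['load_fraction']:.0%} of rating")
```

A total-wattage check like this says nothing about how the load is split across individual rails, which is the objection raised later in the thread.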

Jul 21, 2009, 09:04 AM // 09:04

#32

Jungle Guide

Join Date: Jun 2006

Location: Boise Idaho

Guild: Druids Of Old (DOO)

Profession: R/Mo

Quote:

Edit: I too am a "Folding@home junkie"; that is how I found out that the previous PSU in my system was not functioning correctly.

Last edited by KZaske; Jul 21, 2009 at 09:10 AM // 09:10.

Jul 21, 2009, 12:47 PM // 12:47

#34

Frost Gate Guardian

Join Date: Jul 2006

Location: Warrior's Isle

Guild: LF PvP/GvG Guild.

Jul 21, 2009, 01:34 PM // 13:34

#35

Frost Gate Guardian

Join Date: Mar 2009

Location: Gwen's underwear drawer

Guild: The Curry Kings

Profession: R/

Quote:

While I don't work for nVidia or make other claims about "expertise", I do work in reality, and real-world experience proves that simply adding up watts and then making ludicrous claims based on the result is truly dazzling in its naivety! Total power output for a PSU is about as useless a number as it gets when it comes to understanding whether your system components are going to drag your PSU into over-current fail-safe hell. Far more important are things like the distribution and number of 12 V rails, the devices hanging off them, and the current and power available on each of those rails, i.e. is your single 12 V rail trying to run your CPU, bus, and GPU? I've seen crap 500 W PSUs (especially stock OEM PSUs) keel over with single high-power GPUs because of poor rail design. Likewise, there are numerous high-quality PSUs with lower power ratings that have great designs and can cope under similar load conditions.

-----------

For the OP, this really isn't a question of power; it's really just life. Leave Crappy-dan behind, venture out, and see how your frame rate soars.

@Jessica I have a similar setup and my 4770 charges along at 75 FPS.
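The per-rail point can be made concrete with I = P / V: each +12 V rail has its own current limit, so a PSU can have plenty of total wattage to spare while one rail is overloaded. A minimal Python sketch; the rail names, the 18 A limits, and the device wattages are hypothetical values for illustration, not the specs of any particular PSU.

```python
from typing import Dict, List

RAIL_VOLTAGE = 12.0  # nominal +12 V rail


def rail_overloads(rail_limits_a: Dict[str, float],
                   rail_loads_w: Dict[str, List[float]]) -> Dict[str, float]:
    """Return each rail whose attached load needs more current than it is rated for.

    rail_limits_a: rated current limit of each +12 V rail, in amps.
    rail_loads_w:  wattage drawn from that rail by each attached device.
    Result: overloaded rail name -> current it would need, in amps.
    """
    overloaded = {}
    for rail, loads in rail_loads_w.items():
        needed_a = sum(loads) / RAIL_VOLTAGE  # I = P / V
        if needed_a > rail_limits_a[rail]:
            overloaded[rail] = needed_a
    return overloaded


# Hypothetical dual-rail PSU and loads, for illustration only.
limits = {"12V1": 18.0, "12V2": 18.0}
loads = {
    "12V1": [95.0, 20.0],   # e.g. CPU plus drives/fans (made-up figures)
    "12V2": [150.0, 80.0],  # e.g. GPU plus another heavy consumer (made-up)
}
total_w = sum(sum(v) for v in loads.values())
print(f"Total 12 V load: {total_w:.0f} W")
print("Overloaded rails:", rail_overloads(limits, loads))
```

In this made-up example the two rails carry 345 W in total, well under a 550 W label, yet the second rail would need about 19.2 A against its 18 A limit.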

Jul 21, 2009, 01:59 PM // 13:59

#36

Ascalonian Squire

Join Date: Jun 2009

Location: South Africa

Guild: The ZA Illuminati

Profession: Rt/

60 FPS?

I have an AMD X2 5400+, 4 GB RAM, and an HD 4850, keeping an average of 140 FPS, even in Kamadan, on a 24" LCD at 1900x1200. I think some tweaking needs to be done to your systems.

Jul 21, 2009, 02:22 PM // 14:22

#37

Oak Ridge Boys Fan

Join Date: Jun 2007

Profession: E/P

Quote:

I know this because finding a decently priced power supply capable of supplying over 250 watts on the 12 V rail at ZipZoomFly was surprisingly hard (they had cheap shipping to Alaska at the time). Rahja, if the Corsair PSU needs to produce over twice the GTX 260's TDP to run just that card capably, the card is so far beyond defective that it would never have been allowed out of nVidia's testing labs. To suggest otherwise is a vicious insult against a company I've gladly purchased the following from:

- GeForce2 Ti
- GeForce 6600 GT
- GeForce 9600 GT

I've also had my friends purchase, on my recommendation:

- 7600 GT
- 9600 GSO
- 8800 GTS 320 MB
- 8800 GT
- 9800 GT

NONE of these had this unhinged, unrestrained power use. It's obvious you know far more about electronics than I do, but what you are saying is completely unsupportable.

Last edited by Malician; Jul 21, 2009 at 03:34 PM // 15:34.

Jul 21, 2009, 06:34 PM // 18:34

#38

Core Guru

Join Date: Feb 2005

Quote:

Quote:

Rahja, who claims to work for nVidia, has posted so many untrue and wild claims that I'm not the one with credibility issues: http://www.guildwarsguru.com/forum/s...php?t=10288946

The following are all Rahja quotes:

Quote:

Quote:

http://en.wikipedia.org/wiki/Cas_latency

Quote:

I have noticed over the last few months that he consistently posts misinformation, and people eat it up because he keeps bragging about his summer internship at nVidia.

Last edited by Brett Kuntz; Jul 21, 2009 at 06:36 PM // 18:36.
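The CAS latency dispute turns on the difference between a timing counted in clock cycles and a latency measured in nanoseconds, and the link between them is one conversion: latency in ns = CAS cycles × the length of one clock cycle. For DDR-type memory the I/O clock runs at half the quoted data rate, so DDR2-800 has a 400 MHz clock, or 2.5 ns per cycle. A minimal Python sketch; the module speeds and CL values in the example are illustrative and not taken from either post.

```python
def cas_latency_ns(cas_cycles: float, data_rate_mt_s: float) -> float:
    """Convert a CAS latency in clock cycles to nanoseconds.

    DDR-type memory transfers data twice per I/O clock, so the clock
    frequency is half the quoted data rate (DDR2-800 -> 400 MHz).
    """
    clock_mhz = data_rate_mt_s / 2
    cycle_ns = 1000.0 / clock_mhz  # one clock period in nanoseconds
    return cas_cycles * cycle_ns


# Illustrative module speeds and CL values, not taken from the thread.
for label, cl, rate in [("DDR2-800 CL5", 5, 800),
                        ("DDR2-1066 CL5", 5, 1066),
                        ("DDR2-1066 CL6", 6, 1066)]:
    print(f"{label}: {cas_latency_ns(cl, rate):.1f} ns")
```

This is why the same CL number means a different absolute latency at different speeds: CL5 at DDR2-800 is 12.5 ns, while CL5 at DDR2-1066 is about 9.4 ns.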

Jul 21, 2009, 09:17 PM // 21:17

#39

The Fallen One

Join Date: Dec 2005

Location: Oblivion

Guild: Irrelevant

Profession: Mo/Me

Kuntz... normally I would just delete posts of this inflammatory nature... but honestly, you have taken this one step too far. Insulting my education, belittling my career, and now telling me latency is measured in Hz? ARE YOU OUT OF YOUR MIND?!

First off... the statements made in that RAM guide are correct, albeit vague. To make it accessible to average readers, one must use layman's terms. Sorry, but I am not about to go into the specifics of memory timings and operations in the tech area of a Guild Wars forum. I have better things to do with my time, and far, far more important things to deal with. Most people wouldn't even bother to read it, much less understand it. I am glad that you took the time to read up on exactly how it works.

However, latency is always measured in ns, not Hz. The two are inherently linked, I wouldn't dare dispute that, but the standardized measurement for latency isn't clock cycles, it is nanoseconds. Timings across a parallel circuit and a complex circuit are ALWAYS measured in Hz. This will never change. The CAS and RAS are set as clock cycles, and of course speed makes a difference, as it does with total theoretical bandwidth. Clock speed affects all numbers within a given cell, as that would determine the disparity between low-end and high-end RAM. You are overcomplicating a generic blanket statement, which is very unwise to do.

Timings =/= latency. Latency is measured in units of time; timings are measured in cycles (Hertz). I never confused the two. I did use the wrong notation at one point (which I can see is where the confusion about this whole issue came from). It is rather apparent, though, if you aren't trying to gun me down while reading it, that timings and latency are separate but related terms in the guide.

Quote:

Of course, you neglected to read in context, as I clearly stated just below that...

Quote:

Oh, but thanks for pointing that out and trying to pick out a statement that suited your purposes... Gee, how nice. Feel free to find more of my posts with layman statements that can be lifted out of context. I'm sure it will be a valuable use of your time.

Kuntz, you are not keeping in mind a few simple things... Because there is no additional charge being applied to the wiring leading to graphics cards, and there will be some EM and ES interference (depending on area and environment, this can be very low or high), this can greatly affect total current (amperes). The wattage of a PSU is irrelevant, as voltage is in a constant state of flux (à la amperes in relation to volts and watts, OMG physics!). This would, again, affect on-wire and at-gate current. I'm sorry, but I doubt you understand how forward gates and conversion work in processors, as most people don't (it isn't exactly something you can understand by reading and citing Wiki...). But, in short, energy is lost through each medium it passes through. This electron loss in a processor is known as "leakage". DiELG (Dielectric Gate) leakage is the most common cause of excess heat in a processor of any type. With elevated iL5 gate leakage in the GT200 rev0 chips, this can be seen as power loss. Again... following me here?

Coupled with a driver flaw that is currently being worked on (processing very large texture files and/or small texture files out of a large file) [in this case, the gw.dat is an EXCELLENT example], power draw will be elevated, but current must remain stable. If there is ANY issue with current (which isn't pull, as you are citing... pull is wattage, which is VERY different), the card will be forced to throttle to pass the texture files through. Overly simplified, yes; correct nevertheless.

As for my position at nVidia, I am a Senior Process Design and Physical Design "Engineer".
I use the term engineer loosely, as it isn't engineering in the traditional sense; it mainly deals with process design and sampling, most specifically VLSI design and implementation. Don't defame me, please... But the good news for you is!!! I won't be working for NV after October, as I am heading over to TSMC as a Jr. Process Engineer (almost the same job as at NV, but with more room for career advancement). So then, I guess I won't have claim to the NV throne. You try to glorify my position, but fail to realize that I have the same job that 90+ others do, in this company alone. The fact is...

Quote:

If you want, we can chalk this up to a driver issue and call it a day. The driver issue is (in all likelihood) presenting itself, and that PSU isn't delivering enough current after conversion and e- inversion to correctly power the GTX 260. The card itself isn't terribly flawed; it is within operational parameters. The multiplicative issues that present themselves are the issue, not one or the other. You cannot simply pick and choose one possible issue. In all likelihood, this is a driver/power/current issue; a cumulative effect, so to speak.

And Kuntz... I use my position at nVidia to help people on these forums with hardware-related issues. My expertise has helped many, and that is why people trust my opinion. They don't blindly take my advice; they take it because it has been proven effective over the course of 2+ years. Instead of bashing me and trying to find layman, simplified statements to prove me wrong, why don't you post on the merits of your background in electrical physics, and actually provide me proof that Ohm and Kirchhoff are wrong... Kirchhoff's two laws alone prove that my logic is sound... enlighten yourself before you defame me.

You are taking this way too personally, and I will not stand for any more flaming. If you want to continue this argument, do so in a non-inflammatory manner. Provide evidence aside from total energy pull (wattage) that supports your argument. Wattage means nothing in relation to the current needed to overcome a driver flaw (that, again, is being corrected). Trace leakage, gate leakage, latent loss, electron inversion, conversions, etc. all play into total power. If there are enough flaws, the current will become unstable/too low to compensate for this driver issue, resulting in performance issues. Sorry, this is the way of things, Kuntz. I am but the messenger, not the creator.

But thanks for pointing out the notation issues. I just went ahead and removed the ns from those, as it was totally unintentional.
I do make mistakes when posting 3-page essays, sorry. As you have clearly shown, I do make mistakes from time to time in my posts, especially when the original version of said post was made at 01:49, 8th Jul 2008 (that's nearly 2am). So again, I made a notation error on numbers (and, after reading through it, not just on timings). I went ahead and corrected the errors that I found in the post just for you. I am human, I do make mistakes, but my knowledge on the subject is sound.

Jul 21, 2009, 09:38 PM // 21:38

#40

The Fallen One

Join Date: Dec 2005

Location: Oblivion

Guild: Irrelevant

Profession: Mo/Me

This needs to be separated from the wall of text above, as it is rather important in finding a solution for this issue.

Hey_homies, I can try to craft a custom vBIOS for you. To do this, I need you to create a copy of your vBIOS so I know the exact model, revision, and manufacturer of your card. I might be able to balance the delta multiplier in the card, which should balance performance out in Guild Wars. I've done this for several others, but know that it still carries some risk. If you would like to try this as a possible solution to the issue, just let me know.

As for other solutions to help alleviate the driver issues, head to your nVidia Control Panel:

1. Under the 3D Settings category, select Manage 3D Settings.
2. Go to the Program Settings tab.
3. Select Guild Wars. If it isn't there, add it using the "Add" function; make sure you add gw.exe and not a shortcut or other file.
4. Set the following:
   - Extension Limit: ON
   - Multi Display/Mixed GPU Performance: Single Display Performance
   - Threaded Optimization: OFF
   - Maximum Pre-Rendered Frames: 2

Give those settings a try and see what happens.

All times are GMT.