Jul 27, 2009, 02:27 AM // 02:27

#41

Guest

Join Date: Oct 2008

Quote:

I'd like to see a similar graph for XP; we all know Vista was Microsoft's idea of a bad joke. Or, at least, I hope it was.

Jul 27, 2009, 03:01 AM // 03:01

#42

La-Li-Lu-Le-Lo

Join Date: Feb 2006

Quote:

Jul 27, 2009, 06:03 AM // 06:03

#43

The Fallen One

Join Date: Dec 2005

Location: Oblivion

Guild: Irrelevant

Profession: Mo/Me

Quote:

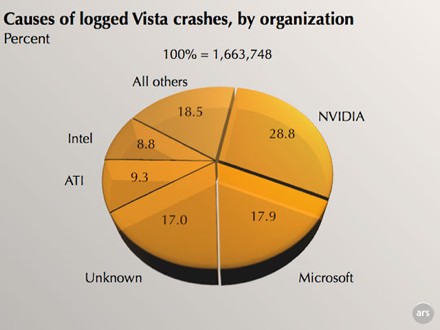

The issue with the mobile division's processors wasn't a solder joint issue... it was faulty cobalt doping. The solder issue you speak of was a completely different issue, and that was a result of our board partners, not us. The faulty cobalt doping resulted in higher than normal heat fluctuation, and when that was compounded with a soldering method that didn't compensate for it, things crumbled into that bad situation. The cobalt doping issue is why we took a one-time expense to fix the issues. We made good on fixing the issue, so the point is really moot. We never lied about the issue, but we did dispute its root cause. People act like we released a product knowing full well that this would happen. That is the farthest thing from the truth, and it is really insulting that you even suggest it. The people I work with are outstanding individuals who are working to advance the graphics industry, not ruin it. Suffice to say, this nVidia bashing is rather silly.

As for that driver bashing... considering the lack of information from Microsoft leading up to Vista's release, it is no surprise that the larger driver package has substantially more crashes. Our drivers are much more complex than ATi's, which leaves more to go wrong. It also stands to reason that we, a company with twice ATi's market share, would have approximately twice the number of crash reports. The fact is, the appalling number of graphics-driver-related issues is a clear indicator of Microsoft's failure to distribute driver code and protocol to either company in the early phases of Vista. It is a shame you didn't think of market share before posting that... I also find it very disappointing that you failed to mention that the graph isn't indicative of total time, nor of any given time frame. It stands to reason that as driver issues were corrected, the number of crashes would have dropped.
Oh, and not to mention, that vague pie chart really tells us nothing, since it isn't specific. (We produce many, many chipsets as well, which are widely used, especially back in 2007, so mainboard driver issues would account for a large percentage of that as well.) It took thousands upon thousands of man-hours to fix issues after the fact, because Microsoft supplied little to no information in the early stages of Vista driver footprint development, among other areas. While there is no denying that our drivers initially had many, many issues with the Vista operating system, our driver development teams have been working endlessly to fix them. Within the last 9-12 months, dramatic improvements have been seen and acknowledged by the tech community. It is unreasonable and absurd to suggest that, without proper guidance from the OS's creator, a third party can hope to make flawless drivers. The shame for driver failures with regard to Vista belongs solely to Microsoft, and Microsoft only.

Now, as for your opinion on chip design... the large on-chip structure of GT200 was simply a starting point for the course we planned to take. GT200 and its sister Tesla units were released during GT300 engineering, that much is true. The large chip design does have drawbacks, but the largest chip you have seen was GT200, not GT300. We have produced a much smaller package with GT300, while ATi's chip has grown substantially. While you can expect ATi's offering to be smaller in physical size, do not expect it to be nearly as small as the previous generation. They effectively doubled the number of shader units, not even mentioning other additions to the chip. TSMC's 40nm process already has low yields, and that is unfortunate. Do not believe for one second that ATi isn't having yield issues of their own. You are also forgetting a very important fact: GT300 is radically different in design from GT200.
You are attempting to compare an architecture that used technologies similar to G80+ against an entirely new architecture, similar in no way to anything you have seen in history. While your simple breakdown of memory layout and bus design is sound, it is still very simplified. While I cannot cite specific numbers, you can expect memory bandwidth on GT300 to exceed 225GB/sec. This grandeur that people spew about ATi has been going on since RV600. Suffice to say, people have been wrong for the last two generations about ATi completely destroying us in performance. ATi had the upper hand in production costs last generation, but that gap is going to be far narrower this round. ATi is riding on their RV700 architecture; we aren't. AMD is in deep financial trouble; we aren't. I certainly don't hope their product fails; I hope it is a gleaming success. Competition spurs innovation and benefits the customer most. However, please try not to throw all your hopes into a company that is no different from its competition. Both companies are out to innovate, and to profit doing it. It would be unwise to support one blindly and throw stones at the other.

In the future, if you care to bash any company or product for any reason, you should know one thing. It stands to reason to post data that is specific, since that is the nature of the tech industry as a whole. Posting generic data with no real specifics is naive, silly, and only serves to point out your hatred or malice for Company A with respect to Company B. I have proven on many occasions that I am unbiased, and have recommended ATi for many builds. It is extremely distasteful that I am now seen as some nVidia Knight, simply in light of my soon-to-expire position within the company.
I would hope that in the future, if you care to defame a company or a product, you provide extensive, precise, and accurate data that highlights the specifics of your argument, instead of sloshing a bucketload of generic data onto the dart board and hoping your dart hits near the bull's-eye.

Jul 27, 2009, 11:23 AM // 11:23

#44

Desert Nomad

Join Date: Sep 2006

Location: Officer's Club

Guild: Gameamp Guides [AMP]

Hey, you guys.

I used nVidia from the 6xxx up to the 7600 series; now I have an ATI 4670. Is there a temperature monitor program or something for this card? nVidia had a temp/fan monitor, so I could watch my GPU cook with BF2. (I actually build computers, but prefer working on the hardware rather than the software end of things.)

Last edited by N E D M; Jul 27, 2009 at 11:25 AM // 11:25.

Jul 27, 2009, 11:39 AM // 11:39

#45

So Serious...

Join Date: Jan 2007

Location: London

Guild: Nerfs Are [WHAK]

Profession: E/

Quote:

Jul 27, 2009, 11:56 AM // 11:56

#46

Guest

Join Date: Oct 2008

Quote:

Any problems I've had, I've had with pretty much both, mostly due to user error or a motherboard manufacturer leaving out a set of cards that wasn't compatible. I've easily spent $50 on Advil alone because of that. (All experiences here were under Linux, by the way. I haven't used an ATI card in a Windows box yet.)

Last edited by Killamus; Jul 27, 2009 at 11:58 AM // 11:58.

Jul 27, 2009, 05:33 PM // 17:33

#47

Core Guru

Join Date: Feb 2005

Quote:

nVidia outright lied about the entire issue. This isn't even a wild claim or some conspiracy; it was proven true by the INQ using science, in the form of numerous engineers and technology.

Quote:

http://www.theinquirer.net/category/...rlie-vs-nvidia

Quote:

How can something less than half the size, with millions of wasted transistors, beat an nVidia chip in performance? Because ATI concentrates on just graphics. nVidia has been pushing this multi-use GPU crap for years, and it hasn't taken off. It won't take off either. And even if it does, it has nothing to do with gaming or performance. ATI's been making DirectX 11 cards since the HD 2900, they've been making 40nm cards since earlier this year, and they've been working with GDDR5 for over a year. nVidia hasn't touched DX11, still hasn't begun manufacturing at 40nm, and still hasn't begun manufacturing with GDDR5. And you think you stand a chance against ATI this round (58xx vs GT300)?

Last edited by Brett Kuntz; Jul 27, 2009 at 06:04 PM // 18:04.

Jul 27, 2009, 08:54 PM // 20:54

#48

The Fallen One

Join Date: Dec 2005

Location: Oblivion

Guild: Irrelevant

Profession: Mo/Me

Quote:

I find it laughable that you even cite the Inquirer as a source. After this post, I'm done arguing this or any other similar topic, seeing where you get your "news" from. It is pointless to argue with anyone who actually cites that man (Charlie) as a source, seeing as he is the most biased, most anti-nVidia, anti-physics, anti-cGPU, anti-everything-else-our-company-tries-to-do person ever to grace the universe. It's like citing a vegan for a study on meat... the logic is totally flawed and backwards... perhaps if he took his head out of his ass for 10 seconds, he might actually be worthy of being, at best, a FOX News "reporter".

Your math on transistor count is wrong. Total die area is not directly proportional to process or platter area. Primary and co-process design is key here. You have to take into account cache levels, chip layout, access areas, and unit type. You aren't including any of that in your "math", and neither does Charlie (how interesting!). You are only thinking of area, not density. Transistor density and implementation are far more critical here than chip area. The doping methods for the 40nm node are radically different, and heavier. This drastically cuts into transistor density, and that is a huge factor you have chosen to ignore (I have no idea why...).

Our company is not filled with the bumbling idiots you would like to believe it is. nVidia is an extremely creative, brilliant company with the foresight and hindsight needed in this industry. The people I work with are unbelievably intelligent, and some of the best in their respective fields. We are not about to engineer a chip that loses the company millions of dollars, as you suggest, that isn't competitive, and that isn't a value to the customer segments we are targeting. We are also not in the business of giving, just as ATi isn't. If you actually believe GT300 is going to be the failure Charlie paints it as, you are lost.
GT300 is arguably the most powerful processor on the planet, and it will show that to you and all the critics. In all likelihood I will be leaving nVidia prior to its release, and I will get to watch and comment on its issues and successes as a third party, just like you. You can be assured GT300 isn't some fat, inefficient, bumbling beast with no real direction. It will be the top-performing card; I have no doubts about that. There are not millions of wasted transistors in ATi's design either... you are off your rocker if you think ATi would ever waste that many transistors. ATi's chips are streamlined graphics powerhouses, with no waste. They are like a light heavyweight boxer, trim and fast. Our chip takes a different approach; GT200 was but a test of that, and GT300 will show the true side of our approach.

Here is some news for you... GT300 is so radically different from GT200 in architecture and design implementation that you can't even logically compare them. You are trying to compare a motorcycle against a car... you don't do that... Charlie attempted to compare GT300 to Larrabee... that is just hilarious and outright stupid. He is an idiot, and taking anything he says to heart is just as stupid. He is already known as the laughing stock of the tech reporting community. Did you know he is blacklisted by over 28 companies? No? Oh, well, now you do. They won't talk to him, and they won't give him any info. Why? For the simple reason that he twists and skews information to the point that it is no longer logical; he comes to his own deluded conclusions based on some sick belief that ATi is a good, righteous company that isn't in the business to make money...

Here is some really bad news for you... if ATi holds to that release date, and we (nVidia) continue to have yield issues with the TSMC 40nm process... guess what ATi is going to do with their prices... they are going to raise them to kingdom come. Why? Oh, because they can!
With no competition, ATi will scrape up every single dollar they can to patch their already terrible quarterly reports. You think nVidia is the only company that does that? HA HA HA. You just wait... come the end of October, you will all be complaining that the prices of the new ATi cards are outrageous, just like you did with GT200 when it was released. Companies are not here to please you; they are here to make money. Try to remember that before you go into fanboy mode. Aside from your Inquirer sources, all written by Charlie, is there any other actual source you would like to cite? It won't matter; I am done arguing on behalf of the company that employs me. If I were able to give you the data that I have, I would. Unfortunately, I enjoy my steady paychecks and am not about to break an NDA to appease you, one who cannot be appeased as it is. As for those who still agree with the driver report numbers, here are some interesting things you failed to know about those numbers:

Now, this will be my last post on this subject, as it is fruitless to continue arguing with someone with as little scope of the industry as you. So, bash away! Here is your chance to post all the misinformation you want, so take this opportunity and grind nVidia into the ground. But know this: I will lock any thread that complains about ATi's high-cost cards when they release; this has never been the place for QQ threads, and it won't start to be.

Jul 28, 2009, 06:30 AM // 06:30

#49

Core Guru

Join Date: Feb 2005

Quote:

With this round, both chips are using GDDR5, so I think things will be closer, but I also know ATI will "win". ATI may not have the single fastest chip, but that doesn't matter. People don't look at that anymore, or care. Who cares if nVidia's chip, twice the size of ATI's, is 2% faster in benchmarks? People aren't willing to pay $200 more for that chip. People are no longer willing to pay hundreds of dollars for 2%. You claim nVidia will have the fastest chip, which I think everyone is expecting anyway, but it won't have the best chip.

Quote:

What ATI will do, however, is release a very inexpensive chip, because they can, since their chip is tiny and cheap to make, and they've been stockpiling 1GB of GDDR5 for quite some time. They will do this because everyone who buys ATI in September 2009 won't buy nVidia in March 2010. ATI could only lose if they released expensive cards. There is no strategy in releasing expensive cards, especially when ATI's cards are so cheap to manufacture due to their tiny die size.

Jul 28, 2009, 01:17 PM // 13:17

#50

Furnace Stoker

Join Date: Apr 2006

Location: Cheltenham, Glos, UK

Guild: Wolf Pack Samurai [WPS]

Profession: R/A

Because Windows 7 is released in October, and it comes with DX11. Microshaft says that Vista will be updated with DX11 as well; however, XP won't be, since, just as with DX10, it wouldn't work with some of XP's back-end code.

Jul 28, 2009, 06:53 PM // 18:53

#51

The Fallen One

Join Date: Dec 2005

Location: Oblivion

Guild: Irrelevant

Profession: Mo/Me

Quote:

In fantasy land, where businesses that are in the red and have an advantage don't price gouge, sure! Unfortunately, we don't live in fantasy land; we live in the real world. ATi is in dire straits, and they will price gouge like crazy with no competition. ATi isn't holier than any other company, and any other sane company would do the same. Oh, and as I mentioned previously... ATi's newest upper-tier cards don't have the smallest die size in the world... unfortunately. Theirs got significantly larger; ours shrank. They are riding their architecture... it will work this generation, but then it's game over. I hope, for your sake, that ATi suddenly becomes more interested in making people happy than in profits... but the truth is, any company that does that goes under. ATi cannot afford to be nicey-nice to their customers. If they alienate them, who cares? You either buy a new ATi DX11 card, or you go without. Not much of a choice...

Jul 29, 2009, 12:11 AM // 00:11

#52

Core Guru

Join Date: Feb 2005

Quote:

When the 4870 and 4850 first came out, nVidia had nothing to compete against them with, and ATI still made them affordable for all.

Jul 29, 2009, 12:30 AM // 00:30

#53

Technician's Corner Moderator

Join Date: Jan 2006

Location: The TARDIS

Guild: http://www.lunarsoft.net/ http://forums.lunarsoft.net/

The results are out for graphics shipments and market share in the second quarter of 2009, and AMD appears to rule the roost.

That's according to Jon Peddie Research - the company specializes in tracking the graphics market, which is a barometer of the entire PC market. In the latest report, JPR says that the channel - that is to say, the distribution and reseller market - stopped ordering graphics cards, made sure their inventories of existing cards were depleted, and hoped the first quarter of this year would be better. The first quarter showed some improvement, but the second quarter was very good for vendors like AMD, Intel and Nvidia. That, says JPR, means the channel is gearing up for a healthy Q3, traditionally a time for good sales.

And now for the figures. Shipments jumped to 98.3 million units, quarter on quarter, year on year. This is the shape of the graphics chip market according to the market research company. The company cautions that things won't return to the normal seasonal pattern until the third or fourth quarter of this year, and the market won't hit the levels of 2008 until 2010. The PC market will be helped by Apple Snow Leopard and Microsoft Windows 7, plus AMD and Nvidia will bring in 40 nanometer designs with better performance at "surprisingly aggressive" prices. The worst is over and there is pent-up demand, hopes JPR.

Article

Jul 29, 2009, 05:14 AM // 05:14

#54

The Fallen One

Join Date: Dec 2005

Location: Oblivion

Guild: Irrelevant

Profession: Mo/Me

Tell me, all-knowing Kuntz, what is the platter and die area of AMD's next-gen chips, and the area of their current chips?

Tell me, all-knowing Tarun, who has a higher overall market share right now, AMD or nVidia? Let's let you answer some direct questions for once.

This is pretty funny... you condemn one company for practicing cGPU technology out of one side of your mouth, then turn around and praise another? Wow... great work there! Larrabee is hilarious, at best. It is going to be the biggest flop the graphics card industry has seen in years, and the laughing stock of the tech industry as a whole. If you actually knew anything about its architecture and Intel's pipe dreams with regard to it, you would laugh too. Sorry, now I am really done with this conversation!

LOLOLOLOL This is too much! I told a friend of mine who actually works in ATi's chip integration division that you said that, and he laughed too. Absolutely classic example of naivete: assuming that because something sounds good in context, it will be popular and work. Communism sounds great on paper too, but we all know how it plays out in real life...

Jul 29, 2009, 08:37 AM // 08:37

#55

Core Guru

Join Date: Feb 2005

Quote:

Jul 29, 2009, 11:18 AM // 11:18

#56

Desert Nomad

Join Date: Nov 2005

Location: Wales

Guild: Steel Phoenix

I think both ATi and nVidia have decent enough drivers. I own a PC with an 8800GTX and one with an HD4870, both running Windows 7 x64, and I've had no crashes in the last 2 months, which is impressive considering the operating system.

The only serious driver problem I've had in the last year or so has been with sound equipment; ASUS onboard sound chips and Creative sound cards are terrible for driver support. I think it's unfair to judge a company by crash statistics. Most crashes are due to the end user being retarded; the majority of computers are so full of crap like search bars, "freeware" apps and other software designed to infest a pleb's PC that it's a wonder they still boot up. Also, I'll add a +1 to the shoddy game design argument: game programmers tend not to be the most talented people, and STALKER is a shining example of letting clueless imbeciles in front of a computer.

Jul 29, 2009, 02:03 PM // 14:03

#57

Wilds Pathfinder

Join Date: Jul 2008

Location: netherlands

Profession: Mo/E

Ow ow, nVidia: the die size of an HD4890 is 292 square mm, and the die size of the GTX285 is 490 square mm. And yes, nVidia has a bigger share of the market, 29.2% versus ATI's 18.4%, but ATI's share is going up and nVidia's is going down. That can change, but if ATI gets their DirectX 11 cards on the market 2 or 3 months before nVidia, ATI's share will go up. There are still people who buy a new VGA card every year; if DirectX 11 arrives, there will be die-hard gamers who switch to ATI. Not that many, but they are willing to pay a nice price for it. About Larrabee, I don't know; maybe it's OK, maybe it sucks ass.
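To put rough numbers on the "small die = cheap chip" argument running through this thread, here is a minimal sketch (not from any poster) using a common first-order dies-per-wafer approximation plus a simple Poisson yield model. It assumes 300 mm wafers and a hypothetical defect density `D0`; the die areas are the ones quoted in the post above, and the output is an illustration of the trend, not real TSMC yield or cost data. The function names are made up for this example.

```python
import math


def gross_dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """Common first-order estimate of whole dies per wafer (hypothetical helper).

    Wafer area divided by die area, minus a correction term for the
    partial dies lost around the circular edge of the wafer.
    """
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(wafer_area / die_area_mm2 - edge_loss)


def poisson_yield(die_area_mm2: float, defects_per_mm2: float) -> float:
    """Simple Poisson yield model: probability that a die has zero defects."""
    return math.exp(-die_area_mm2 * defects_per_mm2)


# Die areas as quoted in the thread. D0 is a hypothetical defect density
# chosen only for illustration; it is not a published TSMC figure.
D0 = 0.002  # defects per mm^2 (0.2 per cm^2), assumed

for name, area in [("HD4890", 292.0), ("GTX285", 490.0)]:
    gross = gross_dies_per_wafer(area)
    good = gross * poisson_yield(area, D0)
    print(f"{name}: {gross} candidate dies, about {good:.0f} good dies per 300 mm wafer")
```

With these inputs the smaller die gives 203 candidate dies per wafer versus 114 for the larger one, before any defects are counted; the yield term then widens the gap further, because the chance of a die catching a defect grows with its area.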

Jul 29, 2009, 08:54 PM // 20:54

#58

über těk-nĭsh'ən

Join Date: Jan 2006

Location: Canada

Profession: R/

AMD's RV800 series chips will probably be significantly bigger. If memory serves me correctly (and the rumor source I read was correct), it will have around 2000 stream processors. If there's no significant architecture change, then the RV800 chip will probably end up at around 350 mm^2 at least.
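The estimate above can be roughly reproduced with a deliberately naive scaling model. This sketch assumes a baseline of the 4870's 260 mm^2 die (the figure quoted later in this thread) with 800 stream processors on 55 nm, the rumored 2000 stream processors, and an ideal 55 nm to 40 nm optical shrink. Real chips don't scale like this: memory controllers, I/O and fixed-function blocks don't grow with shader count, and shrinks are rarely ideal. So treat the result as a ballpark only. `naive_scaled_area` is a hypothetical helper made up for this example.

```python
def naive_scaled_area(base_area_mm2: float, base_units: int, new_units: int,
                      old_node_nm: float, new_node_nm: float) -> float:
    """Naive die-area estimate (hypothetical helper, for illustration only).

    Assumes the whole die grows linearly with shader count and that a
    process shrink scales area by (new_node / old_node) ** 2, i.e. an ideal shrink.
    """
    unit_scale = new_units / base_units
    shrink_factor = (new_node_nm / old_node_nm) ** 2
    return base_area_mm2 * unit_scale * shrink_factor


# Baseline: 4870-class die (260 mm^2, 800 SPs, 55 nm) -> rumored 2000 SPs at 40 nm.
estimate = naive_scaled_area(260.0, 800, 2000, 55.0, 40.0)
print(f"naive RV800 estimate: {estimate:.0f} mm^2")
```

Under these assumptions the function returns roughly 344 mm^2, in the same neighborhood as the "around 350 mm^2" guess. Any real architectural change would move the number in either direction.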

Anyway, I think it's unfair to criticize one company and not the other. I love my Radeon cards, but they haven't exactly been bug-free. My HD4850 shipped with a buggy vBIOS and would routinely get stuck in low-power 3D until I flashed it with a fixed vBIOS. Neither my HD4850 nor my HD4890 can handle overclocking and running dual monitors at the same time. More specifically, the entire HD4800 series cannot handle dual monitors if the vRAM frequency changes; the HD4850 will never leave its maximum frequencies if two monitors are attached. AMD got around this problem in the 4890 by never underclocking the vRAM, which accounts for the 4890's high idle power consumption.

Jul 29, 2009, 09:35 PM // 21:35

#59

Core Guru

Join Date: Feb 2005

Quote:

The 5870 will likely be:

- An 8.5" long card
- Require just one 6-pin connector
- 180mm^2 die size (4870 was 260mm^2, nVidia's was the size of the Titanic)
- Just over a billion transistors

http://www.techpowerup.com/75586/Pow...s_in_2009.html

The reason is simple. ATI figures that if you manufacture a really cheap chip, most people will buy it, since the cost:performance ratio will destroy anything nVidia's 550mm^2 chip could ever dream of. Then, for the enthusiast/performance crowd, they simply start gluing cheap chips together until they have enough to win some benchmarks. And it's going to work out quite well; I really think they've outdone themselves this generation.

Jul 29, 2009, 09:45 PM // 21:45

#60

über těk-nĭsh'ən

Join Date: Jan 2006

Location: Canada

Profession: R/

Ah, I guess my source was really off, then. However, are we sure that picture is of the RV870, and not a more mainstream chip (RV840)?

I'm quite familiar with AMD's "small chip" strategy. However, using a dual-GPU solution for the very high end can have its drawbacks. The HD4870x2 seems to be completely at the mercy of drivers; if a game doesn't have a CrossFire profile, it won't gain the performance benefit. Of course, I still remember the HD4850 debut, and its +105% CrossFire scaling in COD4. Yikes.

All times are GMT.