|

|

Jul 24, 2009, 04:58 AM // 04:58

|

#21 | |

|

Ninja Unveiler

Join Date: Jun 2005

Location: Louisiana, USA

Guild: Boston Guild[BG]

Profession: W/Me

|

Quote:

DX10's failure was all down to Vista's bad rap from the press chewing it up. Regardless, there were a couple of good games that looked and played better in DX10. DirectX 11 is largely backwards compatible with DX10 hardware, so there is no need to shell out for a new graphics card if yours is not that old, unless you've just got to have Shader Model 5.0 and Hardware Tessellation that badly. A lot of DX10 ATI cards already have a tessellation capability anyway. Hopefully with Windows 7's success, people can finally see game makers take full advantage of the API instead of just God Rays, higher-resolution textures and volumetric smoke.

|

|

|

Jul 24, 2009, 09:07 PM // 21:07

|

#22 |

|

The Fallen One

Join Date: Dec 2005

Location: Oblivion

Guild: Irrelevant

Profession: Mo/Me

|

There is a lot more to the story when it comes to us not adopting DX10.1.

It wasn't so much an issue of us being lazy or stiffing the customer. It was purely that DX10 was seen as a roadblock to R&D, and the feature sets and runtime code were presented too late (if at all) to our development teams. By the time Microsoft actually allowed a full code release of Vista and DX10, it was too late. Blame Microsoft for that one. They have taken a different approach with Windows7, and it will show in card release timing, driver stability, and support by game developers this time around. Thankfully, Microsoft got a clue about how to present code and deal with Ring0 implementation. You can't just drop your baggage at the developers' doorstep and say "pick that up would you?" They learned their lesson, and are taking steps to fix it.

As for driver issues... Let me break some shocking news on that front. We and ATi are fed up with game companies expecting us to add in driver fixes for their shady game engine code. A few companies that come to mind are Activision, Blizzard (by far the worst...), EA Europe, Capcom, LucasArts, Rockstar, and Bethesda. All of these companies are notorious for having significant numbers of holes in their code that they expect us to fill, because they can get away with it. There are an unbelievable number of driver fixes for WoW, Oblivion, and GTA4, to name a few (we are talking millions of lines of code per game). This isn't just us, but ATi as well (though I don't know ATi's specific numbers). Game developers need to take more responsibility for their shady coding techniques and the shortcuts they take that our driver teams have to attempt to clean up. If you play WoW, for example, you would know how terribly Dalaran is coded... and the vast majority of Northrend for that matter.

But not all developers are bad eggs when it comes to code. Want to know one of the best companies in the world for engine design? iD Software! They make impeccable engines that require little driver optimization. More developers should take some lessons from companies like iD and Epic Games (developers of the Unreal engine).

As for nVidia in this whole launch issue: We are having some issues with our chip design and TSMC's 40nm process; it's true. Yields are low (I cannot discuss details on numbers at this time), and there isn't much we can do about it but do an A2 spin. This issue will be corrected, and we think that GT300 will prove to be an excellent purchase option for Windows7 users who want to get the most out of their OS and gaming experience. Suffice to say, ATi is experiencing similar yield issues, but I cannot and will not speak for their release time frames. They have a radically different chip design, with more PCB focus, whereas GT300 is more chip centric. It is our belief that GT300 will prove to be the best card you can buy, and will provide more than enough performance for even the most demanding enthusiast.

In a nutshell... we are confident that GT300 will prove to be an excellent solution and will take better advantage of the Windows7 environment while taking next generation gaming to the next level. Because we have a chip that can do more than just graphics.

__________________

|

|

|

Jul 24, 2009, 09:23 PM // 21:23

|

#23 |

|

über těk-nĭsh'ən

Join Date: Jan 2006

Location: Canada

Profession: R/

|

i have to agree about bethesda. fallout 3 and oblivion are possibly the buggiest games i've ever played (even though they are also two of the greatest games ever created. go figure).

|

|

|

Jul 24, 2009, 10:21 PM // 22:21

|

#24 |

|

Core Guru

Join Date: Feb 2005

|

Fallout 3 was awesome, easily my favorite game in a long time! I just bought it yesterday and am going to play through it again (try-before-you-buy ill downloaded it for my first playthrough!).

And the driver support issue will never go away. Designers create horrible engines, which leaves room for one driver company to create a special mod, just for that game, that raises frame rates. That company then gets to raise its arms in the air and scream "our GPU is faster....see, here's proof!" and the other company needs to do the same in order not to look like it has a poor-performing GPU. In some cases, these optimizations decrease image quality, which is "cheating" frame rates. Both companies were caught cheating/lowering image quality in 3D benchmarks like 3dmark06. =\

|

|

Jul 24, 2009, 10:22 PM // 22:22

|

#25 | |

|

Krytan Explorer

Join Date: Jan 2007

Location: Ohio, usa

Guild: none

Profession: Mo/

|

Quote:

Last edited by Blackhearted; Jul 24, 2009 at 10:24 PM // 22:24.. |

|

|

|

Jul 24, 2009, 11:08 PM // 23:08

|

#26 |

|

Wilds Pathfinder

Join Date: Jul 2008

Location: netherlands

Profession: Mo/E

|

aw well, i got a second-hand HD4870*2 for 150 euro so i will wait until i can get an HD5870 or something for 150 euro.

but my HD4870*2 plays everything very well atm. and for drivers, ati control center sucks, nvidia control center sucks, but for ati you can download the drivers only (20MB)

|

|

|

Jul 24, 2009, 11:11 PM // 23:11

|

#27 |

|

über těk-nĭsh'ən

Join Date: Jan 2006

Location: Canada

Profession: R/

|

nvidia's control panel is better, purely because you can have profiles bound to individual applications. CCC does not have this ability. i could go and get ATI Tray Tools, but i would really appreciate it if the official software could give me that ability.

|

|

|

Jul 24, 2009, 11:17 PM // 23:17

|

#28 | |

|

Core Guru

Join Date: Feb 2005

|

Quote:

|

|

|

|

Jul 24, 2009, 11:19 PM // 23:19

|

#29 |

|

über těk-nĭsh'ən

Join Date: Jan 2006

Location: Canada

Profession: R/

|

that's not actually what it looks like. it does basically the backward version of what i want: it loads an application IF i load a profile. i want it to load a profile when i load an application.

|

|

|

Jul 24, 2009, 11:23 PM // 23:23

|

#30 |

|

Core Guru

Join Date: Feb 2005

|

You can either Hotkey a profile, create a desktop icon (which in turn executes an application/game), or use the little right-click menu. I am not sure why they do not include Application Detection outright, but I am pretty sure they did at one point in time. I've always just used defaults for the most part, and in-game options.

|

|

|

Jul 24, 2009, 11:27 PM // 23:27

|

#31 |

|

über těk-nĭsh'ən

Join Date: Jan 2006

Location: Canada

Profession: R/

|

i basically want the card to underclock itself whenever i open GW. let's face it, i don't need my 4890 running 900/1000 to maintain 60 fps in GW. heck, my old 4850, with its original buggy vbios, was running GW flawlessly with it stuck in "low power 3D" for a month before i noticed.

|

|

|

Jul 25, 2009, 12:08 AM // 00:08

|

#32 |

|

Krytan Explorer

Join Date: Jan 2007

Location: Ohio, usa

Guild: none

Profession: Mo/

|

I agree quite a bit with that, as that's one thing i missed when i got this 4850: the simple-to-use per-application profiles. I don't see why ati can't make that.

|

|

|

Jul 25, 2009, 04:32 PM // 16:32

|

#33 |

|

Technician's Corner Moderator

Join Date: Jan 2006

Location: The TARDIS

Guild: http://www.lunarsoft.net/ http://forums.lunarsoft.net/

|

I've found nvidia's "control panel" to be a piece of junk. Every system I've seen it on lags when opening it. I have yet to see that issue when opening ATI's Control Panel, and CCC works quite well, even on old systems.

|

|

|

Jul 25, 2009, 05:03 PM // 17:03

|

#34 |

|

Core Guru

Join Date: Feb 2005

|

With ATI, you can just install the display drivers too. So if you don't really use the CCC for anything, there is no point installing it.

|

|

|

Jul 25, 2009, 07:40 PM // 19:40

|

#35 | |

|

The Fallen One

Join Date: Dec 2005

Location: Oblivion

Guild: Irrelevant

Profession: Mo/Me

|

Quote:

It polls system information before it opens. That causes the delay. Unfortunately, there is no way around that.

__________________

|

|

|

|

Jul 25, 2009, 10:45 PM // 22:45

|

#36 |

|

Technician's Corner Moderator

Join Date: Jan 2006

Location: The TARDIS

Guild: http://www.lunarsoft.net/ http://forums.lunarsoft.net/

|

There is, it's called async threading and better programming; something nvidia is lacking, just look at those horrid drivers.
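To illustrate the async threading point, here is a minimal sketch. It assumes a hypothetical `poll_system_info` function standing in for the slow system query; nothing here reflects how either vendor's control panel is actually written. The idea is only that the slow poll can run on a worker thread while the window is already on screen:

```python
import time
from concurrent.futures import ThreadPoolExecutor


def poll_system_info(delay=0.2):
    """Hypothetical stand-in for a slow hardware/system query."""
    time.sleep(delay)  # simulate the slow poll
    return {"gpu": "example-gpu", "driver": "example-driver"}


def open_panel(executor):
    """Show the window right away and fill in the details later."""
    future = executor.submit(poll_system_info)  # starts on a worker thread
    events = ["window shown"]                   # UI is usable immediately
    info = future.result()                      # wait only when details are needed
    events.append("details loaded")
    return events, info


with ThreadPoolExecutor(max_workers=1) as pool:
    events, info = open_panel(pool)
    print(events, info)
```

In a real GUI the result would be delivered through a callback or event rather than a blocking `result()` call, but the ordering is the same: show first, fill in later.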

|

|

|

Jul 26, 2009, 05:02 AM // 05:02

|

#37 |

|

The Fallen One

Join Date: Dec 2005

Location: Oblivion

Guild: Irrelevant

Profession: Mo/Me

|

Our drivers are very well programmed. There are so many factors involved with modern driver releases, and laziness on the game developer and OS developer side didn't help drivers in the last few years. Suffice to say, Windows7 may change that a bit (but that is totally up to MSFT not being complete morons this time around).

__________________

|

|

|

Jul 26, 2009, 07:04 AM // 07:04

|

#38 | |

|

Core Guru

Join Date: Feb 2005

|

Quote:

nVidia has yet to complete even one generation of 40nm, so they are a little behind in that respect, which is why they are having the 6-month delay in release.

http://service.futuremark.com/hardware

Due to nVidia's huge die size, I think once again they will lose this generation. In fact there was a great article written by an nVidia engineer on how they learned from the GT200 series that having a huge die size is actually a bad thing, but he also said GPUs are engineered in 3-term sets, so the GT300 was actually being engineered before the GT200 was ever really released. So they found out too late that large die sizes are detrimental to the performance & success of your chip.

4890 price: $195

GTX 280 price: $345

Both cards are pretty similar in performance. The GTX 280's die size and wide memory bus are what drive the cost up. Large dies have poor yields and cost more to manufacture, so the cost per working chip climbs quickly as the die grows.

There are 2 ways to increase memory bandwidth: place more physical memory chips on your PCB so you can access more of them at once, or increase the speed at which the memory runs.

nVidia decided to use a 512-bit bus width to access 16 64 MB GDDR3 chips. Each chip has 32 data pins on it, so the GPU itself needs 512 pins to hook up to all 16 chips. This increases the size and complexity of the GPU. This gave it a total of 1024 MB of memory at 140 GB/s bandwidth.

ATI decided to build a smaller, cheaper, simpler, easier to engineer chip with a 256-bit bus width. This means its die size was less than half that of the nVidia chip, but also meant it could only get half the memory bandwidth at the same memory speed. This is why ATI used GDDR5. GDDR5 gets twice the bandwidth of GDDR3 and 4. So in the end, both cards get roughly the same memory bandwidth, except the ATI GPU costs far less to manufacture, it's smaller, and ATI can put 2 and even 3 GPUs on a single PCB to increase performance for enthusiasts.
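The bus-width and bandwidth arithmetic in this post can be checked with a short sketch. The chip counts, pins per chip and bus widths come from the post; the effective data rates (2214 MT/s for the GTX 280's GDDR3 and 3900 MT/s for the 4890's GDDR5) are assumptions added for illustration, not figures from the thread:

```python
def bus_width_bits(chips, pins_per_chip):
    """Total data pins the GPU needs to reach every memory chip."""
    return chips * pins_per_chip


def capacity_mb(chips, mb_per_chip):
    """Total memory on the board."""
    return chips * mb_per_chip


def bandwidth_gb_s(bus_bits, mega_transfers_per_s):
    """Peak bandwidth: bytes per transfer times transfers per second."""
    return bus_bits / 8 * mega_transfers_per_s / 1000


# GTX 280, per the post: 16 GDDR3 chips, 32 data pins and 64 MB each.
gtx280_bus = bus_width_bits(16, 32)
gtx280_mem = capacity_mb(16, 64)
gtx280_bw = bandwidth_gb_s(gtx280_bus, 2214)  # data rate is an assumption

# 4890, per the post: 256-bit bus with GDDR5.
hd4890_bw = bandwidth_gb_s(256, 3900)         # data rate is an assumption

print(gtx280_bus, gtx280_mem, round(gtx280_bw, 1), round(hd4890_bw, 1))
```

With those assumed data rates, the half-width bus ends up in the same ballpark as the 512-bit one because GDDR5 moves roughly twice as much data per pin.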

|

|

|

Jul 26, 2009, 10:40 PM // 22:40

|

#39 | |

|

Technician's Corner Moderator

Join Date: Jan 2006

Location: The TARDIS

Guild: http://www.lunarsoft.net/ http://forums.lunarsoft.net/

|

Stop lying, nvidia's drivers are horrid.

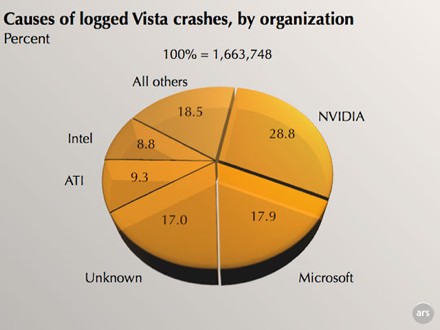

A bit old, but still very true:  Quote:

|

|

|

|

Jul 27, 2009, 01:13 AM // 01:13

|

#40 |

|

Core Guru

Join Date: Feb 2005

|

Ahah that's gotta be all those laptops with nVidia solder joint problems they kept lying about, and INQ busted them using an electron microscope or something. Apple also canceled their contract and is trying to sue for their money back, since nVidia basically bricked an entire generation of Apple laptops.

|

|

|

|

|

All times are GMT.